The R squared of a linear regression is a statistic that provides a quantitative answer to these questions. Before defining it, we warn our readers that several slightly different definitions can be found in the literature. These definitions are usually equivalent in the special but important case in which the linear regression includes a constant among its regressors. Outside this special case, the R squared can take negative values. Whenever a single variable is ruining the model, you should not use the model at all.

- Many pseudo R-squared measures have been developed for such purposes (e.g., McFadden’s rho-squared, Cox & Snell).

- We were penalized for adding an additional variable that had no strong explanatory power.

- Thus, you’ll have less time for studying and probably get lower grades.

- All else being equal, a model that explained 95% of the variance is likely to be a whole lot better than one that explains 5% of the variance, and likely will produce much, much better predictions.

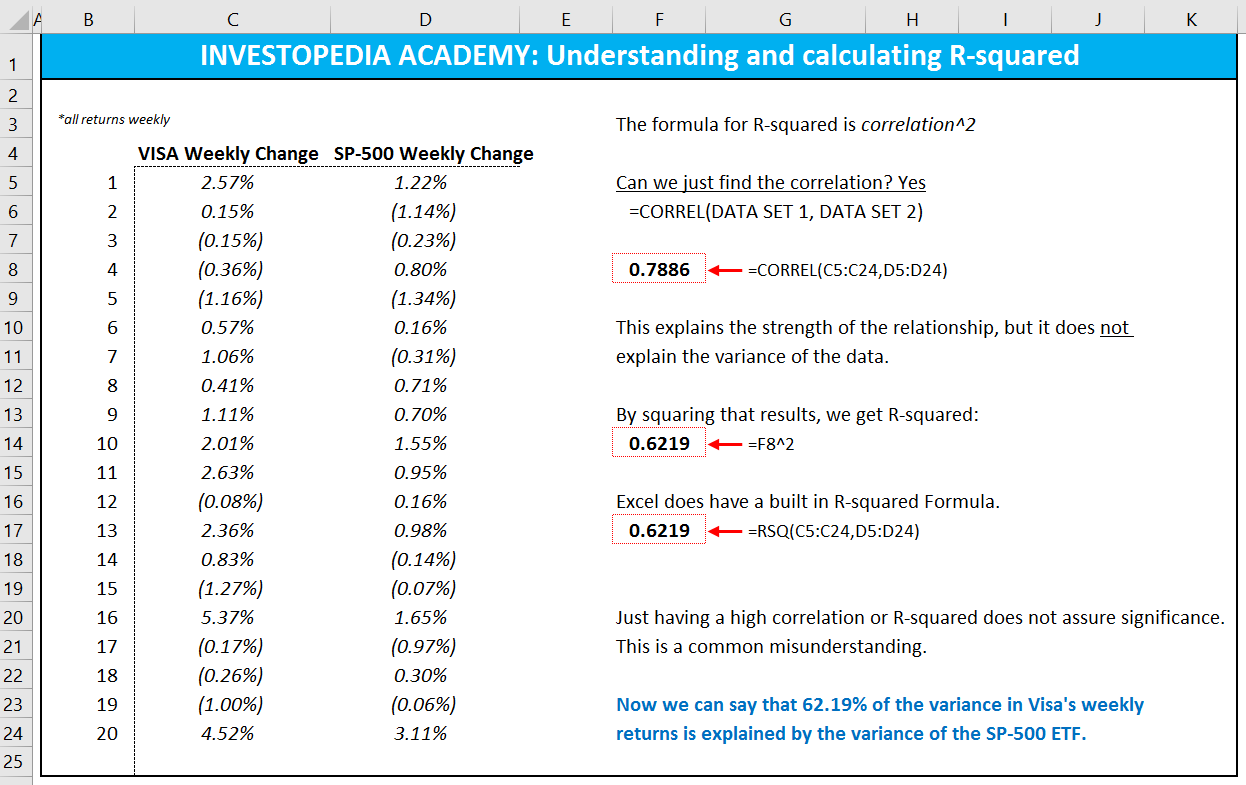

- R-squared estimates how much of the movement in a dependent variable is explained by movements in an independent variable.


It may depend on your household income (including that of your parents and spouse), your education, your years of experience, the country you live in, and the languages you speak. Even together, these factors may still account for less than 50% of the variability of income. A key highlight from that decomposition is that the smaller the regression error, the better the regression. In investing, a high R-squared, from 85% to 100%, indicates that the stock’s or fund’s performance moves relatively in line with the index. A low R-squared, at 70% or less, indicates that the fund does not generally follow the movements of the index.
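As a rough illustration of the fund-versus-index reading, the sketch below regresses a fund's returns on an index's returns and reports R-squared. The return figures are hypothetical numbers made up for this example, and the variable names are not taken from any particular data source.

```python
import numpy as np

# Hypothetical monthly returns (in percent), invented for illustration only.
index_returns = np.array([1.2, -0.8, 2.1, 0.5, -1.5, 1.8, 0.3, -0.6])
fund_returns = np.array([1.0, -0.5, 2.4, 0.2, -1.9, 1.5, 0.6, -0.9])

# Fit fund = intercept + slope * index by ordinary least squares.
slope, intercept = np.polyfit(index_returns, fund_returns, deg=1)
fitted = intercept + slope * index_returns

rss = np.sum((fund_returns - fitted) ** 2)               # unexplained variation
tss = np.sum((fund_returns - fund_returns.mean()) ** 2)  # total variation
r_squared = 1.0 - rss / tss

# With one regressor plus an intercept, R-squared equals the squared correlation.
correlation = np.corrcoef(index_returns, fund_returns)[0, 1]
print(f"R-squared: {r_squared:.3f}, squared correlation: {correlation ** 2:.3f}")
```

Under these assumptions, the two printed numbers agree, which is why a fund's R-squared against an index is often described as the square of their correlation.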

Interpreting R²: a Narrative Guide for the Perplexed

There is a huge range of applications for linear regression analysis in science, medicine, engineering, economics, finance, marketing, manufacturing, sports, etc. In some situations the variables under consideration have very strong and intuitively obvious relationships, while in other situations you may be looking for very weak signals in very noisy data. The decisions that depend on the analysis could have either narrow or wide margins for prediction error, and the stakes could be small or large.

ARE LOW R-SQUARED VALUES INHERENTLY BAD?

The only scenario in which 1 minus something can be higher than 1 is if that something is a negative number. But here, RSS and TSS are both sums of squared values, that is, sums of non-negative values, so their ratio cannot be negative and R² cannot exceed 1. A low R-squared is most problematic when you want to produce predictions that are reasonably precise (have a small enough prediction interval). How high the R-squared needs to be depends on your requirements for the width of a prediction interval and how much variability is present in your data.
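The upper bound on R² argued above can be written as a short chain of inequalities. The notation is an assumption following the usual textbook convention: $y_i$ are the observed values, $\hat{y}_i$ the model's predictions, $\bar{y}$ the sample mean, and the outcome is assumed not to be constant, so that TSS is strictly positive.

```latex
R^2 = 1 - \frac{\mathrm{RSS}}{\mathrm{TSS}}, \qquad
\mathrm{RSS} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 \ge 0, \qquad
\mathrm{TSS} = \sum_{i=1}^{n} (y_i - \bar{y})^2 > 0
\;\Longrightarrow\; \frac{\mathrm{RSS}}{\mathrm{TSS}} \ge 0
\;\Longrightarrow\; R^2 \le 1 .
```

Nothing in this chain bounds R² from below: whenever RSS exceeds TSS, the ratio is greater than 1 and R² is negative.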

In general, a model fits the data well if the differences between the observed values and the model’s predicted values are small and unbiased. If we display the models introduced in the previous section against the data used to estimate them, we see that they are not unreasonable models in relation to their training data. Indeed, the R² values for the training set are at least non-negative (and, in the case of the linear model, very close to the R² of the true model on the test data).

Using R-squared effectively in regression analysis involves a balanced approach that considers the metric’s limitations and complements it with other statistical measures. When communicating R-squared findings, especially to non-technical audiences, focus on simplification and contextualization, ensuring the interpretations are both accurate and accessible. The more factors we include in our regression, the higher the R-squared: adding a regressor to an OLS model can never lower it, even when the new factor has no real explanatory power.
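The effect of piling on factors can be sketched with synthetic data. In the example below, one regressor is genuinely related to the outcome and five more are pure noise. The helper names `r_squared` and `adjusted_r_squared` are hypothetical and defined only for this illustration, and the adjusted formula is the standard textbook one, not something stated earlier in this text.

```python
import numpy as np

def r_squared(X, y):
    """R-squared of an OLS fit of y on X, with an intercept column added."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    return 1.0 - (residuals @ residuals) / np.sum((y - y.mean()) ** 2)

def adjusted_r_squared(r2, n, k):
    """Adjusted R-squared for n observations and k regressors (intercept excluded)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)

rng = np.random.default_rng(0)            # fixed seed so the run is repeatable
n = 50
x = rng.normal(size=n)
y = 2.0 + 1.5 * x + rng.normal(size=n)    # synthetic outcome with one real predictor
noise = rng.normal(size=(n, 5))           # five regressors unrelated to y

r2_values = []
for extra in range(6):
    X = np.column_stack([x, noise[:, :extra]])   # real predictor plus `extra` noise columns
    k = X.shape[1]
    r2 = r_squared(X, y)
    r2_values.append(r2)
    print(k, round(r2, 4), round(adjusted_r_squared(r2, n, k), 4))
```

The plain R-squared column never goes down as noise columns are added, while the adjusted value applies a penalty for each extra regressor and may fall when the new column contributes little.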

When the number of regressors is large, the mere fact of being able to adjust many regression coefficients allows us to significantly reduce the variance of the residuals. But being able to mechanically make the variance of the residuals small by adjusting the coefficients does not mean that the variance of the errors of the regression is as small. This definition is equivalent to the previous definition in the case in which the sample mean of the residuals is equal to zero (e.g., if the regression includes an intercept). It is possible to prove that the R squared cannot be smaller than 0 if the regression includes a constant among its regressors and the coefficients are estimated by OLS (in this case the residual sum of squares cannot exceed the total sum of squares).
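The role of the constant can be checked on a toy data set. The sketch below fits the same five points twice, once through the origin and once with an intercept, and computes R-squared as 1 minus RSS over TSS in both cases. The data and the helper `r_squared` are assumptions made up for this illustration.

```python
import numpy as np

def r_squared(y, fitted):
    """1 - RSS/TSS, with TSS measured around the sample mean of y."""
    rss = np.sum((y - fitted) ** 2)
    tss = np.sum((y - y.mean()) ** 2)
    return 1.0 - rss / tss

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([5.0, 4.0, 3.0, 2.0, 1.0])   # toy data: y = 6 - x exactly

# OLS through the origin (no constant): slope = sum(x*y) / sum(x*x).
slope_no_const = np.sum(x * y) / np.sum(x * x)
r2_no_const = r_squared(y, slope_no_const * x)

# OLS with a constant among the regressors.
slope, intercept = np.polyfit(x, y, deg=1)
r2_with_const = r_squared(y, intercept + slope * x)

print(f"without constant: {r2_no_const:.3f}")
print(f"with constant:    {r2_with_const:.3f}")
```

Without the constant, the fitted line is forced through the origin and does worse than simply predicting the mean, so R-squared is negative (about -2.27 here). With the constant, the fit is exact and R-squared is 1.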

However, R-squared doesn’t tell you whether your chosen model is good or bad, nor will it tell you whether the data and predictions are biased. For an OLS regression that includes an intercept, R-squared values range from 0 to 1 and are commonly stated as percentages from 0% to 100%. An R-squared of 100% means that all of the movements of a security (or another dependent variable) are completely explained by movements in the index (or whatever independent variable you are interested in). To calculate the total variation, subtract the average actual value from each of the actual values, square the results, and sum them. The result is the total sum of squares, an important component in calculating R-squared.
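The calculation described above can be followed step by step on a handful of numbers. The `actual` and `predicted` arrays are hypothetical values chosen so the arithmetic is easy to check by hand.

```python
import numpy as np

actual = np.array([3.0, 5.0, 7.0, 9.0])      # hypothetical observed values
predicted = np.array([2.5, 5.5, 6.5, 9.5])   # hypothetical model predictions

mean_actual = actual.mean()                  # average actual value: 6.0
tss = np.sum((actual - mean_actual) ** 2)    # total sum of squares: 9 + 1 + 1 + 9 = 20
rss = np.sum((actual - predicted) ** 2)      # residual sum of squares: 4 * 0.25 = 1
r_squared = 1.0 - rss / tss                  # 1 - 1/20 = 0.95

print(f"TSS={tss}, RSS={rss}, R-squared={r_squared:.2f}")
```

Here the predictions leave 1 unit of squared error out of 20 units of total variation, so 95% of the variation is accounted for.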

If we are really attached to the original definition, we could, with a creative leap of imagination, extend it to cover scenarios where arbitrarily bad models add variance to your outcome variable. The inverse proportion of variance added by your model (e.g., as a consequence of poor model choices, or overfitting to different data) is what is reflected in arbitrarily low negative values. While a higher R² suggests a stronger relationship between variables, smaller R² values still hold relevance, especially for multifactorial clinical outcomes.
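One way to see how a model can "add variance" is to fit it on one data set and score it on another where the relationship runs the other way. The numbers below are a deliberately extreme, made-up example rather than a realistic case of overfitting.

```python
import numpy as np

x = np.array([0.0, 1.0, 2.0, 3.0])
y_train = np.array([0.0, 1.0, 2.0, 3.0])   # training data: y rises with x
y_test = np.array([3.0, 2.0, 1.0, 0.0])    # evaluation data: y falls with x

# Fit a line on the training data only.
slope, intercept = np.polyfit(x, y_train, deg=1)
test_predictions = intercept + slope * x

rss = np.sum((y_test - test_predictions) ** 2)   # squared error of the model on new data
tss = np.sum((y_test - y_test.mean()) ** 2)      # squared error of predicting the mean
r_squared_test = 1.0 - rss / tss

print(f"RSS/TSS = {rss / tss:.1f}, R-squared = {r_squared_test:.1f}")
```

The model's squared error on the new data is four times that of simply predicting the mean, so R-squared comes out at about -3.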